Deep Steward

Posted April 17, 2019 by Ian Ingram and Theun Karelse

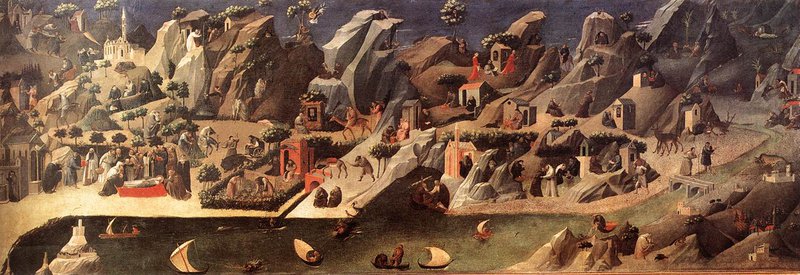

Unknown Florentine, The Thebaid, ca. 1410

When landscape appeared in European art it emerged first as a landscape of symbols. The Gothic depiction of Earth was populated with features that were primarily there as convenient symbols for a narrative. Some natural objects were treated realistically but many - like the absolutely fantastical mountain-formations depicted here in The Thebaid - are almost ideograms for mountains taken straight from Byzantine tradition. This is landscape seen over the shoulders of the main subject: humans (patrons) and biblical figures. A space where features are tagged placeholders in a larger narrative geography.

Van Eyck ca 1414 to 1417 in the Hours of Turin manuscript

With Van Eyck the environment first appears as a landscape of fact, according to the eminent art historian Kenneth Clark. “In a single lifetime” - Clark writes in Landscape into Art - “Van Eyck progressed the history of art in a way that an unsuspecting art-historian might assume to take centuries. In these first ‘modern’ landscapes Van Eyck achieves by color a tone of light that seems to already fully breathe the air of the Renaissance.”

At present, landscape is emerging in artificial minds through machine learning from domains such as precision agriculture, mining, forestry, transport and ecology. Some of the visual and conceptual similarities with early landscape painting are striking.

Theun Karelse - rendering

Until recently the ability to make sense of the environment was limited to biological beings, but machines are starting to blur those lines. Slowly the debate around machine intelligence is moving from human-centered preoccupations - like job security, privacy and politics - to the impact of these technologies on non-human lives. Similar to early landscape painting, machines gaze over the shoulders of humans into the background environment. In machine perception the environment seems to emerge as a landscape of commodity.

The Machine Gaze

Unlike the painting tradition, machine perceptions of landscape aren’t rooted in Byzantine art, but defined by the platforms and training sets that humans provide. Leading image classifiers like Inception are typically trained on a strange collection of species, including for instance more than a hundred dog breeds. These sets of animals and plants do not refer to any existing ecosystem, but are a hand-picked bunch of species that are of interest to humans in some way. When such an AI is introduced to a real-world terrain these pretrained sets prove about as relevant as cat videos to a marine ecologist. In fact, when we turned the camera-eye to the direct surroundings of the Kilpisjarvi Biological Research Station during our Ars Bioarctica residency in the Finnish Arctic in 2018, it was looking out across a snow-covered terrain full of hundreds of birch trees, lichen-covered rocks and perhaps some passing birds, but when we asked the AI what it saw it told us it saw snowmobiles. There were none. It was hallucinating. It was hallucinating a landscape full of snowmobiles. And perhaps more strikingly, it didn't see the trees.
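Why can such a classifier not simply say "birch"? A minimal sketch of the mechanism, under loud assumptions: the label list and the scores below are invented for illustration and are not taken from Inception or from our field recordings. The point it shows is that a closed-set classifier spreads all of its belief over the labels it was given, so every scene has to become one of them.

```python
import math

# Hypothetical label vocabulary (an assumption for illustration only).
# A real pretrained classifier has around a thousand such labels.
LABELS = ["snowmobile", "vacuum cleaner", "guillotine", "sled dog"]


def softmax(scores):
    """Turn raw scores into probabilities that always sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, labels=LABELS):
    """Return the most probable label and its probability."""
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]


# Made-up scores for a snowy birch forest: nothing fits well,
# but "snowmobile" fits least badly because of all the snow.
snowy_birch_scores = [2.1, 0.3, 0.2, 1.4]
label, confidence = classify(snowy_birch_scores)
print(label)
```

With these invented numbers the answer is "snowmobile". There is no "birch" in the vocabulary, and no way for the machine to answer "none of the above".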

The worldview of these platform AIs is largely populated by human artifacts, ranging from snowmobiles to vacuum cleaners, and even guillotines. The worldview of technology isn’t neutral. Our landscapes, however, are still full of trees before they are planks, rocks before they are architecture, water before it is Evian. So, it turns out cyborgs do not dream of electric sheep, but have much more commodified imaginations as the platform AIs of late capitalism grow up human-centered. With much of our current environmental predicament stemming from anthropocentric bias, this raises the question: should our machines learn exclusively from humans, should their natural habitat be corporate, or do intelligent machines need training-forests, like orphaned Orangutans in Indonesian rehabilitation programmes? Do the artificial agents that are currently taking a seat in corporate boardrooms deserve to spend their weekends floating around coral reefs, hiking across mountains, or getting stuck in a swamp? What if machines also learn directly from animals and plants?

Deep bestiary

In his explorations of a ‘parliament of things’ Bruno Latour leaves the implementation up to others, and there certainly remains the question of whether a human can truly be an adequate representative of all the kinds of things in such a parliament. Who or what can best vote in the interest of a birch, or, for that matter, of the air around it? Could an artificial agent be trained to see the world beyond anthropocentric training sets? Could it - like Van Eyck - move beyond a landscape of human symbols? If a machine is less confined to human classifications, would it come up with something radically different from Linnaean taxonomy? What features of natural phenomena would catch its attention and into what kinds of unknown bestiaries would it cluster them? We hope to explore such unknown worlds with the DeepSteward.

DeepSteward, an unsupervised field agent

In The Hamburg Manifesto, Karl Schroeder describes his concept of Thalience as “an attempt to give nature a voice without that voice being ours in disguise. It is the only way for an artificial intelligence to be grounded in a self-identity that is truly independent of its creator's.” As a “successor to science”, thalience proposes that we don't want machine copies of our own minds; we want to “give the natural world itself a voice”.

The DeepSteward is built by humans but left to interpret local trees, local plants, local animals and local geographical features as it sees fit. This might include natural processes: geologic structures, symbioses, meteorological phenomena, hydrological systems. The emerging taxonomy and classifications may be very foreign or even unrecognizable to humans. It may form a breed of animism beyond our understanding. For the first tests the unsupervised DeepSteward will therefore be accompanied by a supervised ‘cousin’, a much more straightforward platform AI which is trained by humans telling it that a duck is a duck and a willow is a willow. Comparing results between the DeepSteward and its human-supervised sidekick may help us humans (audience) to understand or relate to the landscape of the DeepSteward.
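One way to picture that comparison is a simple cross-tabulation: for every observation, note which cluster the unsupervised agent placed it in and which name the supervised cousin gave it. The sketch below is hypothetical throughout. The cluster ids, the labels and the helper names `cross_tabulate` and `purity` are assumptions for illustration, not the DeepSteward's actual pipeline.

```python
from collections import Counter, defaultdict

# Hypothetical paired observations (an assumption for illustration):
# (cluster chosen by the unsupervised agent, label from the supervised cousin)
observations = [
    ("cluster_0", "duck"),
    ("cluster_0", "duck"),
    ("cluster_0", "willow"),
    ("cluster_1", "willow"),
    ("cluster_1", "willow"),
    ("cluster_2", "duck"),
    ("cluster_2", "willow"),
]


def cross_tabulate(pairs):
    """Count, per cluster, how often each human label occurs in it."""
    table = defaultdict(Counter)
    for cluster, label in pairs:
        table[cluster][label] += 1
    return table


def purity(label_counts):
    """Share of a cluster taken up by its most common human label."""
    return max(label_counts.values()) / sum(label_counts.values())


table = cross_tabulate(observations)
for cluster in sorted(table):
    print(cluster, dict(table[cluster]), round(purity(table[cluster]), 2))
```

A cluster with high purity roughly matches a human category. A cluster that mixes ducks and willows evenly is where the DeepSteward may be grouping by something else entirely, and where the comparison becomes interesting.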

Testing environment

For a first public field session the DeepSteward has been invited to Het Nieuwe Instituut to join the Zoöp experiment. Central to Zoöp are the two large ponds at Het Nieuwe Instituut and the garden. The experiment explores a Decentralised Autonomous Organisation based on biological capital instead of financial capital and experiments with the kinds of infrastructure that might make humans, non-human organisms and machine intelligences equal partners in the venture. In the Zoöp experiment the DeepSteward is invited as an unsupervised informant.

Zoöp runs as part of the Neuhaus exhibition from 19/05 until 15/09 at Het Nieuwe Instituut.

DeepSteward is supported by Stimuleringsfonds.

Created: 15 Jul 2021 / Updated: 27 Sep 2022