Woven robots for dancing together during a pandemic

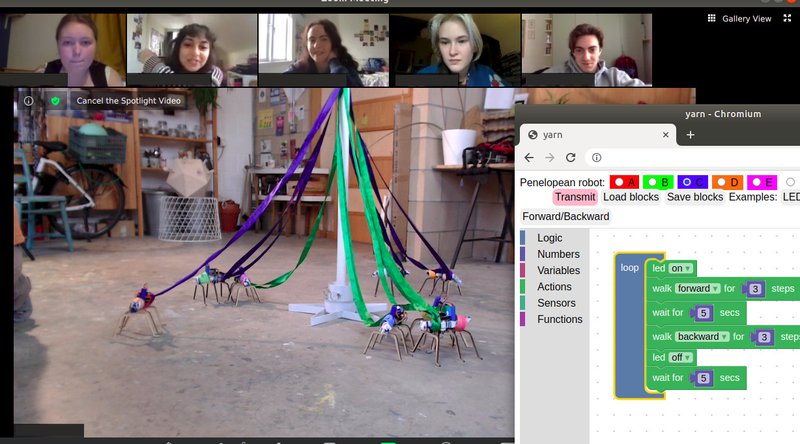

Posted Feb. 24, 2021 by Dave Griffiths

We've repurposed our maypole robots so people can dance together safely (woven robots don't need to social distance).

In preparation for the upcoming PENELOPE exhibition (watch this space) we've been following a few threads lately with the Penelopean weaving robots. In keeping with making everything tactile and tangible, we were planning on getting them to follow lines on the floor built up by tiles - a kind of tangible programming language for braids.

The coronavirus pandemic and its shift of focus to online streaming, plus a request for a workshop in February 2021, meant we could try something new. The robots all run their own independent programs (in a custom language called Yarn) broadcast over radio, but the livecoding potential hadn't yet been explored.

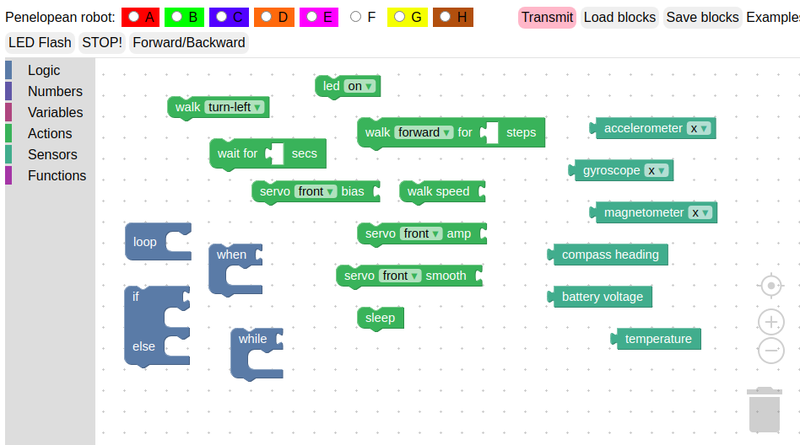

To make the (Lisp-based) Yarn language more accessible and editable online, we adapted Google's Blockly, a visual drag-and-drop programming system, to generate Yarn Lisp code. This is served online by a Raspberry Pi, which is fitted with the same radio module (NRF24L01) the robots have.
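As a rough illustration of how a tree of visual blocks can be turned into Lisp text, here is a minimal Python sketch. It is not the Blockly generator we used, and the block names (`forever`, `led`, `wait`) are hypothetical stand-ins rather than real Yarn commands. It only shows the general idea that each block becomes a parenthesised list of its name followed by its arguments.

```python
def to_sexpr(block):
    """Render a nested block description as a Lisp s-expression string.

    A block is a dict with an "op" name and an optional list of "args";
    anything else (numbers, symbol names) is treated as an atom.
    """
    if isinstance(block, dict):
        # Operator first, then each argument rendered recursively.
        parts = [block["op"]] + [to_sexpr(arg) for arg in block.get("args", [])]
        return "(" + " ".join(parts) + ")"
    return str(block)


# Hypothetical LED flasher built from nested blocks (names are assumptions).
flasher = {
    "op": "forever",
    "args": [
        {"op": "led", "args": [1]},
        {"op": "wait", "args": [0.5]},
        {"op": "led", "args": [0]},
        {"op": "wait", "args": [0.5]},
    ],
}

print(to_sexpr(flasher))
```

Because the visual blocks nest in the same way Lisp lists do, the translation needs very little machinery, which is part of why a Lisp-style language suits this kind of editor.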

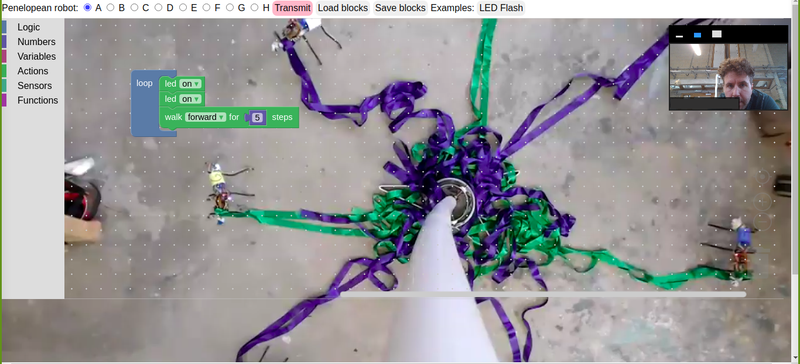

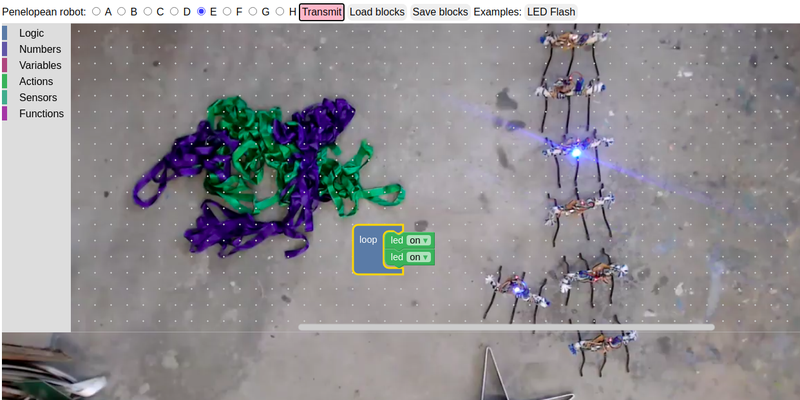

(View from a camera mounted on the maypole, with the code overlaying the video image. LED flashing is useful to check you are talking to the robot you think you are.)

We set up a maypole in our studio, rigged up some cameras and decent lighting, and attached markers to the robots so they could easily be picked out on the video feed by colour and letter. The interface provides a way to pick which robot you want to pair with (we assigned the pairs beforehand) and it gives you feedback if there is a problem communicating (e.g. if the robot is out of range). With the video latency taken into consideration, an important aspect here was to reduce the send - compile - transmit - acknowledge loop to under a second, even with eight people using it at the same time. The effect is perhaps a bit like sending code to a robot on a different planet.
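The shape of that loop can be sketched in Python as below. This is an assumption-laden illustration, not our server code: `send_program`, `FlakyRadio` and all their parameters are hypothetical, and the radio is simulated rather than driving a real NRF24L01. The idea it shows is retrying a transmission until the robot acknowledges it or a one-second budget runs out, then reporting the outcome so the interface can give feedback.

```python
import time


def send_program(radio_send, robot_id, payload, deadline_s=1.0, attempt_timeout_s=0.2):
    """Retry a transmission until it is acknowledged or the deadline passes.

    radio_send is any callable (robot_id, payload, timeout_s) -> bool that
    returns True when an acknowledgement arrived within timeout_s.
    """
    start = time.monotonic()
    attempts = 0
    while time.monotonic() - start < deadline_s:
        attempts += 1
        if radio_send(robot_id, payload, attempt_timeout_s):
            return {"ok": True, "attempts": attempts}
    # No acknowledgement in time, e.g. the robot is out of range.
    return {"ok": False, "attempts": attempts}


class FlakyRadio:
    """Simulated radio (hypothetical) that drops the first few packets."""

    def __init__(self, drops):
        self.drops = drops

    def __call__(self, robot_id, payload, timeout_s):
        if self.drops > 0:
            self.drops -= 1
            time.sleep(timeout_s)  # waited for an ack that never came
            return False
        return True


result = send_program(FlakyRadio(drops=2), "A", b"(led 1)", attempt_timeout_s=0.01)
print(result)
```

Bounding the whole exchange by a deadline, rather than by a fixed number of retries, keeps the worst-case wait predictable for the person at the other end of the video stream.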

(All the logic, action and sensor blocks)

In previous performances all the robots were running the same program, which meant the maypole braid (tangle) patterns produced were fairly uniform twists, even with people interacting and moving the robots around. With this individual livecoding setup, the dance and resulting tangle are much messier and take longer to disentangle. The element of music (and poetry) in the original maypole performances was missed by the participants, but they managed to work out that it was possible to make music themselves by using the base of the maypole as an instrument.

We started out with three different types of program, all available as pre-built examples you can drag in. The first was a simple LED flasher, which is useful to check you are sending code to the robot you think you are. The second was like a remote control, simply setting the current walking pattern (forward, backward, various turning options or emergency stop). The third is where we get into more interesting territory: sequencing a certain number of steps forward and back, waiting for a time and repeating moves. After a while of playing with these, we introduced more commands, for example controlling the speed of the movements and the individual parameters of the leg servos. This became useful for participants tuning programs for their individual robots, as the robots all naturally have distinct characteristics.

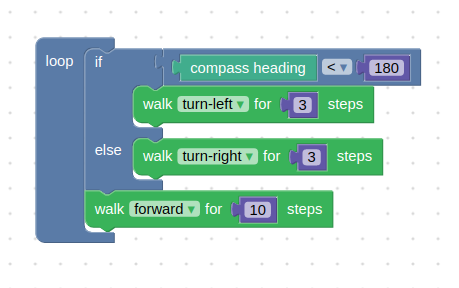

(An example program that uses the magnetometer sensor to make the robot gradually walk in a southerly direction).
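The logic of a program like this can be sketched in Python as follows. This is not the Yarn program in the image: the function name, the pattern names and the 15 degree tolerance are all hypothetical, and it assumes a compass heading in degrees (0 = north, increasing clockwise) has already been derived from the magnetometer. It picks a walking pattern that turns the robot the shorter way round until it faces roughly south, then walks forward.

```python
def choose_pattern(heading_deg, target_deg=180.0, tolerance_deg=15.0):
    """Pick a walking pattern that steers the robot towards target_deg.

    Headings are compass degrees, 0 = north, increasing clockwise
    (an assumption; real sensor conventions depend on mounting).
    """
    # Signed smallest angle from current heading to target, in [-180, 180).
    error = (target_deg - heading_deg + 180.0) % 360.0 - 180.0
    if abs(error) <= tolerance_deg:
        return "forward"
    # Positive error means the target lies clockwise, i.e. to the right.
    return "turn_right" if error > 0 else "turn_left"


for heading in (90, 170, 270):
    print(heading, choose_pattern(heading))
```

Running a decision like this on every step is what makes the drift southwards gradual: the robot alternates between small corrections and forward steps rather than turning once and walking in a straight line.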

My job during all this was to run around picking the robots up when they fell over. This does not happen quite as much as it used to, but they needed a bit of manual untangling after a while. We kept them running for each group for about an hour and a half, and I found it interesting that the connection we've seen during in-person performances remained between the human and the robot. A foot mysteriously fell off one of the robots, giving it a distinct limp. I offered to switch its operator to a different robot, but they felt sorry for it and wanted to keep it. It was also interesting that participants managed to keep track of their individual robot once they got their eye in.

In regard to teaching programming (none of the students had programmed before), I felt that it may have been important that I could not see their code. This meant there was no pressure on them from me walking around checking if they were OK, and it felt relaxed and slower. More significantly, I find that a situation where you are programming something in a physical (if remote) environment has a lot of benefits. To start with, the code seemed complex and the world familiar, but as we progressed the code, networking and radio infrastructure required to make everything work became simple and predictable, almost disappearing in comparison to the physical world and the forces and tangles involved. Coming back to the Penelope project, and our initial aim to re-imagine technologies that would have arisen if the Ionian Greeks had invented the computer, I feel that this livecoding experiment in the woven cosmos is something that they would not have had much trouble understanding.

Created: 15 Jul 2021 / Updated: 23 Oct 2021